To get the best out of any interaction with generative AI, you need to communicate your needs effectively. This crucial skill is called prompt engineering.

I work with AI several times a week, and that is likely to increase as AI becomes more embedded in my systems and work, so I’ve been keen to develop this skill. This guide is aimed at beginners who want to improve their ability to communicate their requirements effectively with generative AI.

What Exactly is Prompt Engineering?

If you’ve already used a Large Language Model (LLM), you’ll know that you start the conversation by asking it for a response. That opening message, and any follow-up messages you send, are known as prompts.

Prompt engineering is the process of creating and optimising prompts for effective interactions with Large Language Models. It requires a blend of creativity and an understanding of the LLM’s capabilities.

The Importance of Context: Understanding Contextual Prompting

Contextual prompting in prompt engineering involves providing context to the Large Language Model within your prompt. This context helps the LLM successfully complete its task.

Examples of providing context include:

- Useful background information on the task at hand.

- Details on the expected output. For example, as I live in England, I prefer outputs in GB English.

- The desired tone; you might want it to provide critical feedback at times.
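
To make this concrete, here is a minimal sketch in Python of how those three kinds of context could be combined into a single prompt. The `build_prompt` function and its parameter names are my own illustrative assumptions, not part of any AI tool or library; the point is simply that each piece of context gets its own clearly labelled line.

```python
def build_prompt(task, background="", output_format="", tone=""):
    """Assemble a prompt from a task plus optional pieces of context.

    Hypothetical helper for illustration only; the labels are arbitrary.
    """
    parts = []
    if background:
        parts.append(f"Background: {background}")
    parts.append(f"Task: {task}")
    if output_format:
        parts.append(f"Output requirements: {output_format}")
    if tone:
        parts.append(f"Tone: {tone}")
    # One labelled line per piece of context keeps the prompt easy to read.
    return "\n".join(parts)


prompt = build_prompt(
    task="Review the draft introduction to my blog post.",
    background="The post is a beginner's guide to prompt engineering.",
    output_format="Write in GB English.",
    tone="Give critical feedback.",
)
print(prompt)
```

You would then paste the printed text into whichever model you are using. Leaving out an argument simply leaves out that line, so the same helper works for quick questions as well as detailed requests.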

My Personal Journey: How I Learned Prompt Engineering

As I’ve mentioned in some previous posts, when I first tried out the initial release of OpenAI’s ChatGPT, I knew we were at the dawn of what was likely to be the next technological revolution – AI had become real.

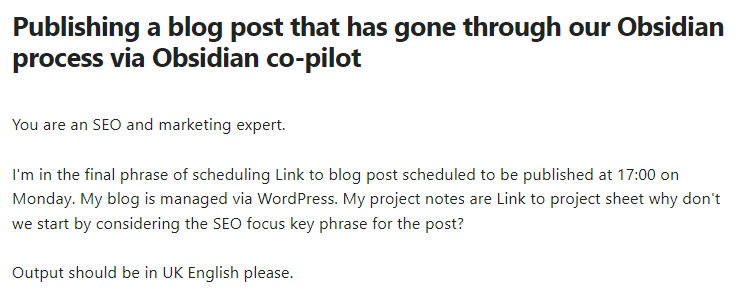

So, I started to read more about AI and kept an eye on how the models were developing by regularly using various models. In early 2024, I began experimenting with AI community plugins in Obsidian, firstly with the Smart Connections plugin and more recently with the Obsidian Copilot plugin.

In Spring 2025, I decided to pay for the Obsidian Copilot plugin. As I used it more, I realised that to get the most out of the community plugin and more general models, I should look to learn more about prompt engineering.

Having already done some studying of AI, I knew I’d picked up some knowledge. So, I asked Obsidian Copilot to review my vault and identify what I already knew about prompt engineering and where my gaps were.

This feedback formed the context for my next question, which I asked Obsidian Copilot and most of the other popular general models: to create a study plan with recommended sources to help me target my learning and fill those gaps.

Over the last few months, I’ve read the material, taken highlights, and added notes to my personal knowledge management system. But I also tried to practise what I had learned, and I would recommend that you do the same.

Practical Steps to Get Started

Here are some practical ideas to help you begin:

- Focus on Clarity: Explain what you want as clearly as you can. If your thoughts aren’t clear yet, carry on anyway, but treat it as an exploratory conversation that helps you clarify them.

- Be Specific: Explain what you want to get out of the conversation. It can also be helpful to explain why and, in some cases, how you want it.

- Provide Context: Supply all information relevant to your conversation; this context helps the AI model to understand your requirements.

- Experiment and Iterate: Keep experimenting with your prompts and even with the models themselves. At times, it can be helpful to run the same prompt in different models and follow up with the one that gave you the best answer, if needed.

- Keep Learning: Large Language Models and generative AI are developing quickly at the moment, with new models, related technology, and new prompting techniques emerging.
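
If you are comfortable with a little Python, the "Experiment and Iterate" idea of running one prompt through several models can be sketched as follows. Everything here is an assumption for illustration: `model_a` and `model_b` are stand-in functions that return canned text, and in practice you would replace them with calls to whichever models you actually use.

```python
from typing import Callable, Dict


def run_across_models(
    prompt: str, models: Dict[str, Callable[[str], str]]
) -> Dict[str, str]:
    """Send the same prompt to every model and collect the replies by name."""
    return {name: ask(prompt) for name, ask in models.items()}


# Stand-in "models" (hypothetical): each just echoes the prompt with a label.
def model_a(prompt: str) -> str:
    return f"[model_a] reply to: {prompt}"


def model_b(prompt: str) -> str:
    return f"[model_b] reply to: {prompt}"


responses = run_across_models(
    "Summarise the benefits of contextual prompting in three bullet points.",
    {"model_a": model_a, "model_b": model_b},
)

# Print the replies one after another so they are easy to compare.
for name, reply in responses.items():
    print(f"--- {name} ---")
    print(reply)
```

Reading the replies side by side makes it easier to decide which model to follow up with.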

Conclusion

Now that you’ve read this introductory guide, I recommend reading both the OpenAI and Google prompt engineering guides linked under Further Reading, whatever model you decide to use.

If you would like me to write a guide on some of the more complex prompt engineering concepts, such as chain of thought, please leave a comment below.

Further Reading

- Prompt engineering best practice for ChatGPT: A best practice guide written by OpenAI for their own LLM, ChatGPT.

- Google Prompt Engineering Guide: Google’s own prompt engineering manual.

- Introductory Guide to Large Language Models: My introductory guide to language models.

- Demystifying Generative AI: My Journey from Novice to Understanding: My introductory guide to generative AI.